Reduce IT/OT Network Traffic

Reduce Latency

Secure Tunnel or Air-Gapped

Real World Testing

Lower Entry Barrier

No Premature Optimization

Resilience & Isolation

Interchangeable Components

Automation with CI/CD

No Vendor Lock-In

Battle Tested

Flexibility

This section is a simple demonstration of what can be implemented on the shop floor with iot|edge across various manufacturing industries. Since we, as humans, are (still) the benchmark for pattern recognition, it will be easy for domain experts to see how iot|edge can be adapted to solve their specific challenges. Of course, all business/custom logic can be developed in the preferred programming language. This reduces translation friction and lowers the entry barrier for contributions. In short, iot|edge serves as an incubator to get innovative solutions into production faster and safer, giving you an edge over the competition.

| | Computer Vision | Computer Audio | Timeseries |
| --- | :---: | :---: | :---: |
| Condition Monitoring | ✔︎ | ✔︎ | ✔︎ |
| Predictive Maintenance | ✔︎ | ✔︎ | ✔︎ |
| Quality Inspection | ✔︎ | ✔︎ | ✔︎ |

The following custom Grafana dashboard shows real-time image segmentation processed with iot|edge. The images, which belong to the COCO dataset, are acquired from an image stream and then run through the deeplabv3_resnet50 model in PyTorch to predict the segmentation mask. The segmentation mask is shown as an overlay on the raw image on the right side of the dashboard. Beyond this example, imagine applying it to tasks such as detecting defects on surfaces or product packaging, tracking objects on assembly lines, or sorting products based on features.

Of course, the inference can be GPU-accelerated for more demanding workloads, for example by using an edge device from the Nvidia Jetson family with the Nvidia Container Runtime. Alternatively, a Tinybox is an option if you are aiming for maximum FLOPS/$.

The following custom Grafana dashboard shows real-time audio classification processed with iot|edge. First, the waveform of the audio signal is processed using the Short-Time Fourier Transform (STFT), which extracts the frequency components over time and converts them into a 2D image (Mel spectrogram). This image is then used as input for the YAMNet model in TensorFlow to predict various classes of noise within a given time frame of the signal. Imagine all the practical applications for Computer Audio. For instance, you could monitor the wear of bearings using their distinct noise for smart maintenance. It could also be used to confirm a process step based on its sound characteristics, such as the click of a lid.
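
To make the STFT step more tangible, here is a minimal sketch using only the Python standard library. It stops at a linear-frequency magnitude spectrogram; the Mel scaling and the YAMNet inference are not shown. The function name `stft_magnitudes`, the Hann window, the frame length of 64 samples, and the hop of 32 samples are assumptions chosen for illustration, not the settings used in the dashboard.

```python
import cmath
import math


def stft_magnitudes(signal, frame_len=64, hop=32):
    """Return one magnitude spectrum (bins 0..frame_len//2) per overlapping frame."""
    # A Hann window reduces spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        spectrum = []
        for k in range(frame_len // 2 + 1):
            # Plain DFT of the windowed frame; fine for a demo, slow for real audio.
            acc = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


# Synthetic input: a 1 kHz sine sampled at 8 kHz (illustrative values).
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]

spectrogram = stft_magnitudes(tone)  # rows = time frames, columns = frequency bins
peak_bin = max(range(len(spectrogram[0])), key=lambda k: spectrogram[0][k])
peak_hz = peak_bin * sample_rate / 64
```

Each row of `spectrogram` is one time slice, so stacking the rows gives the 2D image described above. In practice you would likely reach for an FFT-based library routine instead of the hand-written DFT.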

The following customized Grafana dashboard shows a binary classification for a univariate time series processed with iot|edge. The yellow indicator is generated by a custom Python function when a certain threshold is reached. Additionally, a notification is sent via email. The rules (conditions) or logic for inference can be made arbitrarily more complex, for example, by using multivariate sliding or striding windows for single or multi-step predictions. In short, this could support all classic condition monitoring and further predictive maintenance methods.
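
As a rough illustration of such a rule, the sketch below flags a reading once the rolling mean over a small sliding window exceeds a threshold. The function name `classify_stream`, the window size of 3, the threshold of 30.0, and the sample readings are all assumptions for this example; the email notification is left out.

```python
from collections import deque


def classify_stream(values, threshold, window=3):
    """Yield (value, alarm) pairs; alarm is True when the rolling mean exceeds threshold."""
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    for value in values:
        recent.append(value)
        rolling_mean = sum(recent) / len(recent)
        yield value, rolling_mean > threshold


# Hypothetical sensor readings with a short excursion above the threshold.
readings = [20.0, 21.0, 20.5, 35.0, 36.0, 37.0, 22.0]
labels = [alarm for _, alarm in classify_stream(readings, threshold=30.0)]
# labels == [False, False, False, False, True, True, True]
```

Note how the window smooths the signal: the first spike alone does not raise the alarm, and the alarm persists for one reading after the value drops again. Swapping the mean for another statistic, or feeding several signals into the window, is a straightforward extension.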

The following customized Grafana dashboard consolidates multiple real-time visualizations in one central place, processed by iot|edge. By using such a blueprint to monitor the current state of your machines and processes, you can incrementally develop digital shadows on your shop floor. The advantage is that the digital twin can be easily brought to life within iot|edge's architecture by simply inputting simulation data. This makes what-if analysis a breeze while considering real-world latency and throughput constraints. Of course, all use cases discussed above can neatly integrate with a digital twin. E.g., imagine being able to commission new machines entirely virtually.

The following OpenWebUI (with Ollama in the background) demonstrates interaction with user-provided documents/images by leveraging LLMs with Nvidia RTX 4080 acceleration hosted on iot|edge. This setup allows operators to efficiently interact with sensitive or confidential documents, such as work instructions, blueprints, or operating procedures, when the cloud is not an option. Additional benefits could include the translation of industry-specific jargon, to bridge communication gaps and onboard new employees more seamlessly. By improving information accessibility and understanding, LLMs can increase efficiency and support better decision-making.

Especially for LLMs, inference is only enjoyable with GPU acceleration and sufficient VRAM. While smaller models can run with as little as 1.3 GB of VRAM (Llama 3.2 1B), model quality generally increases with size. For reference, the largest open-source model currently available (Llama 3.1 405B) requires 231 GB of VRAM.

Valkey (a fork of Redis) as an in-memory cache primarily excels due to its abstraction around streams. In particular, the consumer groups for these streams offer a streamlined and robust mechanism to handle synchronous as well as parallel workloads. Furthermore, Valkey decouples technologies and protocols of data sources, data sinks, and workers. This flexibility enables hot reloading of connectors, dispatchers, and workers without disrupting operations.
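
If consumer groups are new to you, the following toy model may help. It is a plain in-memory stand-in, not the Valkey API and not iot|edge code: the class `ToyStreamGroup` and its methods are hypothetical and only mimic the roles that the `XADD`, `XREADGROUP`, `XACK`, and `XPENDING` commands play for a single stream with a single group.

```python
from collections import deque


class ToyStreamGroup:
    """In-memory stand-in for one stream with one consumer group (not the Valkey API)."""

    def __init__(self):
        self._queue = deque()  # entries not yet delivered to any consumer
        self._pending = {}     # entry id -> (consumer, payload): delivered, not yet acked
        self._next_id = 0

    def add(self, payload):
        """Append an entry to the stream (role of XADD)."""
        self._next_id += 1
        self._queue.append((self._next_id, payload))
        return self._next_id

    def read(self, consumer, count=1):
        """Deliver up to `count` new entries; each goes to exactly one consumer."""
        delivered = []
        while self._queue and len(delivered) < count:
            entry_id, payload = self._queue.popleft()
            self._pending[entry_id] = (consumer, payload)
            delivered.append((entry_id, payload))
        return delivered

    def ack(self, entry_id):
        """Mark an entry as processed (role of XACK)."""
        self._pending.pop(entry_id, None)

    def pending(self):
        """Ids that were delivered but never acknowledged (role of XPENDING)."""
        return sorted(self._pending)


group = ToyStreamGroup()
for reading in ("21.5", "21.7", "22.0", "22.4"):
    group.add(reading)

worker_a = group.read("worker-a", count=2)  # gets entries 1 and 2
worker_b = group.read("worker-b", count=2)  # gets entries 3 and 4, no overlap
for entry_id, _ in worker_a:
    group.ack(entry_id)
unacked = group.pending()  # worker-b has not acknowledged its entries yet
```

The two properties to take away are that workers in one group split the load without seeing the same entry twice, and that unacknowledged entries stay visible so a crashed worker's share can be picked up again.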

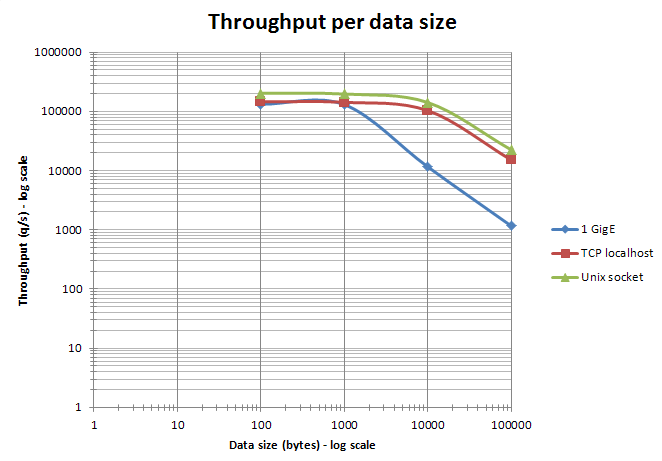

Valkey offers very solid out-of-the-box performance, which can be further optimized by leveraging iot|edge and implementing custom encodings written in Rust tailored towards the specific use case. To address potential bottlenecks caused by high request rates, iot|edge's connectors offer buffering mechanisms. While this might introduce slightly more latency, it significantly increases throughput, especially in the 0.1-10 KB range, ensuring optimal performance even under demanding conditions.
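
The latency-for-throughput trade-off of buffering can be sketched in a few lines. This is not the iot|edge connector implementation; `BatchBuffer`, its parameters, and the limits used below are hypothetical. Messages are collected and handed over in one batch once either a size limit or an age limit is reached, so many small requests collapse into a few larger ones.

```python
import time


class BatchBuffer:
    """Collect messages and flush them in batches by size or age (hypothetical helper)."""

    def __init__(self, flush, max_items=100, max_age_s=0.05, clock=time.monotonic):
        self._flush = flush          # callable that receives one list of messages
        self._max_items = max_items  # flush when this many messages are waiting
        self._max_age_s = max_age_s  # or when the oldest message is this old
        self._clock = clock
        self._items = []
        self._first_at = None

    def add(self, item):
        if not self._items:
            self._first_at = self._clock()
        self._items.append(item)
        too_many = len(self._items) >= self._max_items
        too_old = self._clock() - self._first_at >= self._max_age_s
        if too_many or too_old:
            self.flush()

    def flush(self):
        if self._items:
            batch, self._items = self._items, []
            self._flush(batch)


batches = []
buffer = BatchBuffer(batches.append, max_items=4, max_age_s=60.0)
for i in range(10):
    buffer.add(f"msg-{i}".encode())
buffer.flush()  # drain the remainder, for example on shutdown
batch_sizes = [len(batch) for batch in batches]
# batch_sizes == [4, 4, 2]
```

Ten messages turn into three writes here. The age limit bounds the extra latency a single message can pick up while waiting for its batch to fill; note that this sketch only checks it when a new message arrives.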

For local disk persistence, such as needed during data sink downtimes, iot|edge extends the native Valkey options by writing to Parquet files, more specifically to Delta Lake with Rust. This means that in addition to persistence with excellent compression, you can already do ad-hoc data analytics using tools like DataFusion or Polars. Of course, the extent of this depends on the resources of the edge device.

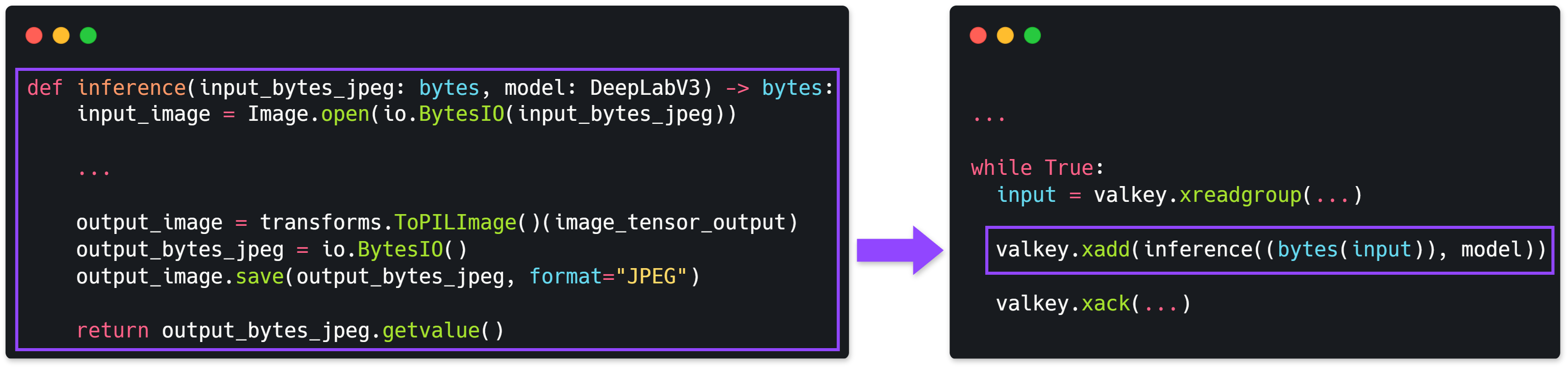

The data science lifecycle starts with data collection, followed by preprocessing, model selection, and training. Once promising results are achieved on test data, data scientists often encounter a major obstacle: securely deploying the model in a real-world manufacturing environment while maintaining a well-governed process — without being bothered by tasks outside their comfort zone. If this challenge sounds familiar, iot|edge is here to help.

iot|edge offers secure, optimized Docker containers that streamline the entire deployment process. You can simply integrate your code — data transformation or AI/ML model — by following the provided function signature as illustrated in the example.

A variety of Python signatures implementing pd.DataFrame, pl.DataFrame, JPEG, PNG, and more come out of the box. Custom signatures can also be added with ease. The deployment and the integration are then managed automatically by a CI/CD pipeline, which can be customized to include steps like code reviews, unit tests, and quality gates.

iot|edge makes it easy to monitor model performance by performing A/B tests while running different models simultaneously. This allows you to confidently assess whether new models outperform previous model generations.
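
Conceptually, such a comparison boils down to feeding the same samples to both models and scoring them side by side. The sketch below is a simplified illustration under stated assumptions, not the iot|edge mechanism: `ab_compare`, the two threshold "models", and the labelled samples are all made up for this example.

```python
def ab_compare(samples, model_a, model_b):
    """Run both models on every labelled sample and return the accuracy of each."""
    hits = {"a": 0, "b": 0}
    for features, label in samples:
        hits["a"] += model_a(features) == label
        hits["b"] += model_b(features) == label
    total = len(samples)
    return {name: count / total for name, count in hits.items()}


# Hypothetical stand-ins for a previous and a candidate model generation.
def previous_model(x):
    return x > 0.7


def candidate_model(x):
    return x > 0.5


# Hypothetical labelled samples: (feature value, expected class).
samples = [(0.2, False), (0.55, True), (0.6, True), (0.8, True), (0.4, False)]
accuracy = ab_compare(samples, previous_model, candidate_model)
# accuracy == {"a": 0.6, "b": 1.0}
```

With real workloads you would likely track more than accuracy, for example latency or resource usage per model, before promoting the candidate.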

To cover the whole spectrum of data science, Python, R, and MATLAB are fully compatible. Other languages such as Rust, C, and C++ can be added with ease.

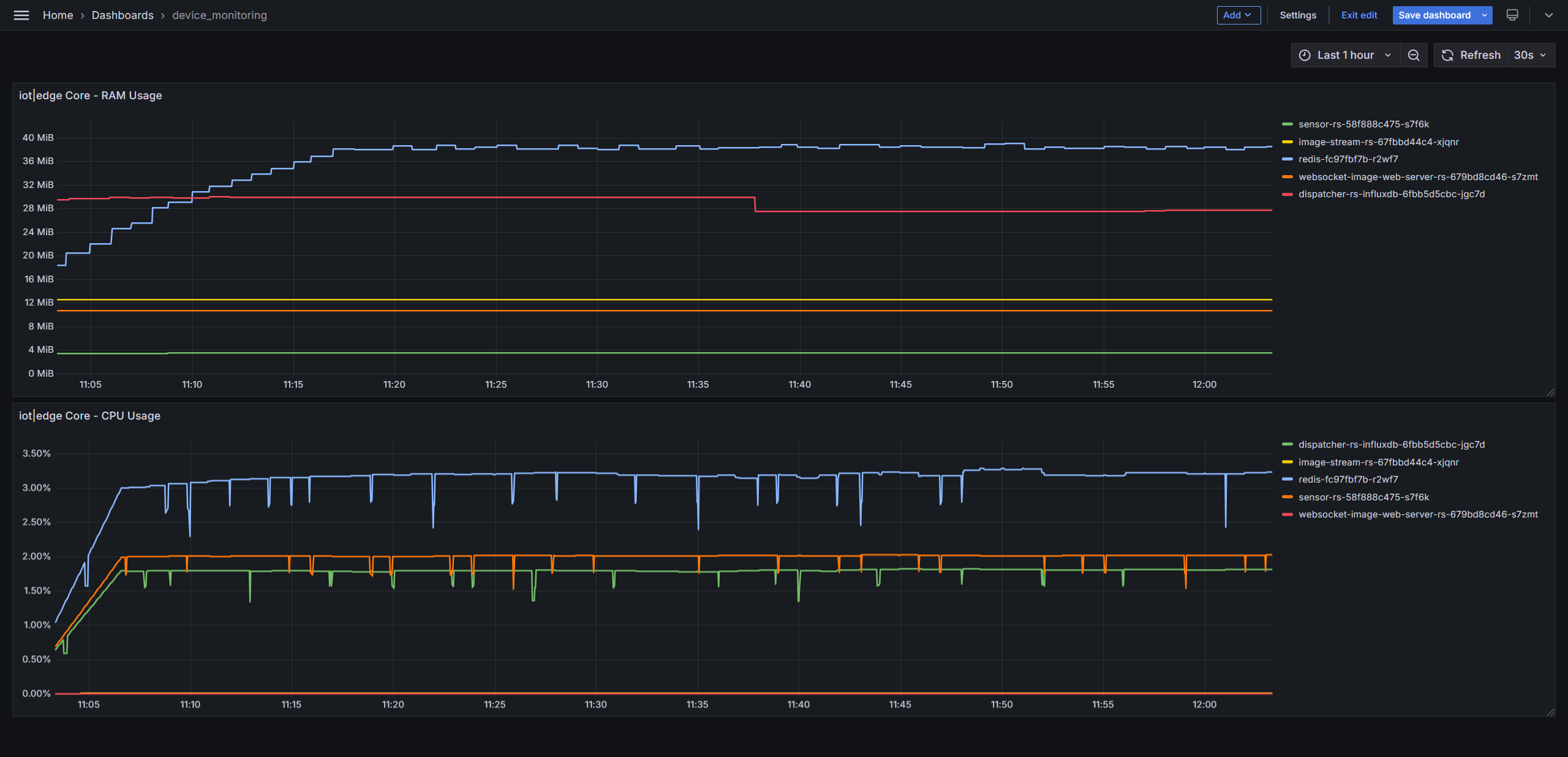

Grafana serves as the visualization backbone for iot|edge. It provides a highly customizable foundation for creating a wide variety of out-of-the-box visualizations to support all kinds of use cases, as shown above. Equally important, it enables in-depth performance monitoring and service logging for iot|edge through an integrated observability stack, as shown below.

Metrics are managed by Prometheus and logs by Loki. Both are fed by Alloy as the telemetry collector. Together, these components provide excellent system observability and simplify performance tuning. They also make it easy to track the state of a service or application, for example to check for out-of-sync images during parallel image processing or, more generally, to spot a memory leak in a service. The latter is extremely useful in combination with Kubernetes resource limits, which allow rapid prototyping in the real world without the fear that one service will bring down the whole edge device.

iot|edge can be deployed securely, efficiently, and flexibly from central repositories like GitHub, GitLab, Azure DevOps, etc., to bridge the gap between IT and OT — whether strict isolation or real-time updates are needed.

Our journey began in early 2019 when we first met during a gym session (Mondays, chest day, incline dumbbell bench press, a classic; this is how lifelong bonds are forged). From the very beginning, we shared a similar mindset of fun, curiosity, and competition.

Since we are both passionate about programming and software engineering, we brought the same mindset to those pursuits, which became the foundation of our collaboration. As you can imagine, workouts can turn philosophical from time to time, and discussions can just as easily turn physical: a perfect equilibrium. Over 12 months ago, we started this project, and this website serves as its showcase.

Through our side projects and professional experiences, we cover a wide range of expertise and interests.

But most important!